While many of us are familiar with Google Search and its related terms, most are unaware of the finer details. Here is a closer look at what those terms mean in practice. Crawling, indexing and ranking are essentially how Google finds pages that are newly created or updated. The content of those pages is added to Google’s database, and is then displayed in response to a user’s query.

Every day, globally, billions of questions are asked on various search engines. With a 92% market share, Google is the market leader, while the other search engines compete for the remaining 8%. Bing has a share of 3%, while Yahoo and Yandex command 1% each, with other search engines taking up the rest.

Those are the numbers. Let’s take a look at how search engines find answers to queries.

Crawling, indexing and ranking are responsible for the manner in which answers are displayed on screen in a particular order.

Note the phrase “particular order”. It is this order that determines your site’s visibility, and the rest of this article covers how to get your site to the top of search results.

How Do Search Engines Work?

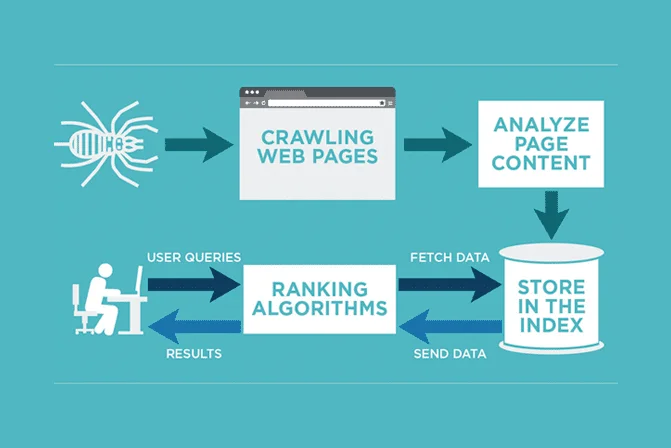

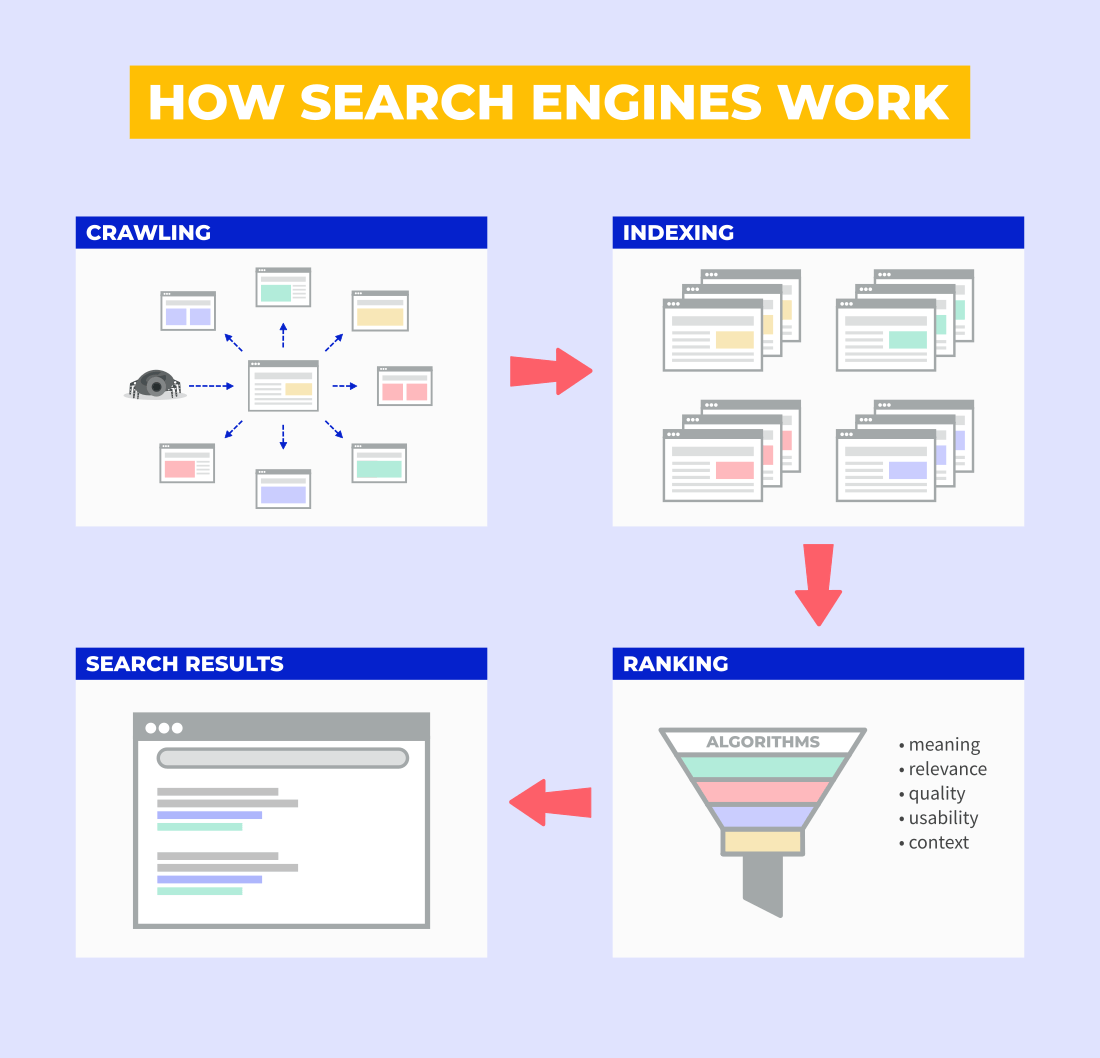

Typically, search engines perform three different functions, one after the other.

#1 Crawling – Bots known as search engine spiders crawl the internet looking for pages and content. They reach new pages by following the links in pages they already know.

#2 Indexing – After crawling, the pages that search engines have found are added to their respective databases. For instance, pages crawled by Google are added to the Google index.

#3 Ranking – Next in the process is ranking. Pages are ranked on the basis of various factors; Google is widely reported to use more than 200 of them to rank a page.

So, let’s dive deep into the first of these, crawling.

What Is Search Engine Crawling?

Automated bots known as crawlers or spiders continuously search the internet for websites and pages, including updated and newly added content. The types of content crawled in this manner include:

- Entire web pages

- Text content

- Images with tags

- Videos with tags

- PDF files, and

- virtually any other form of content

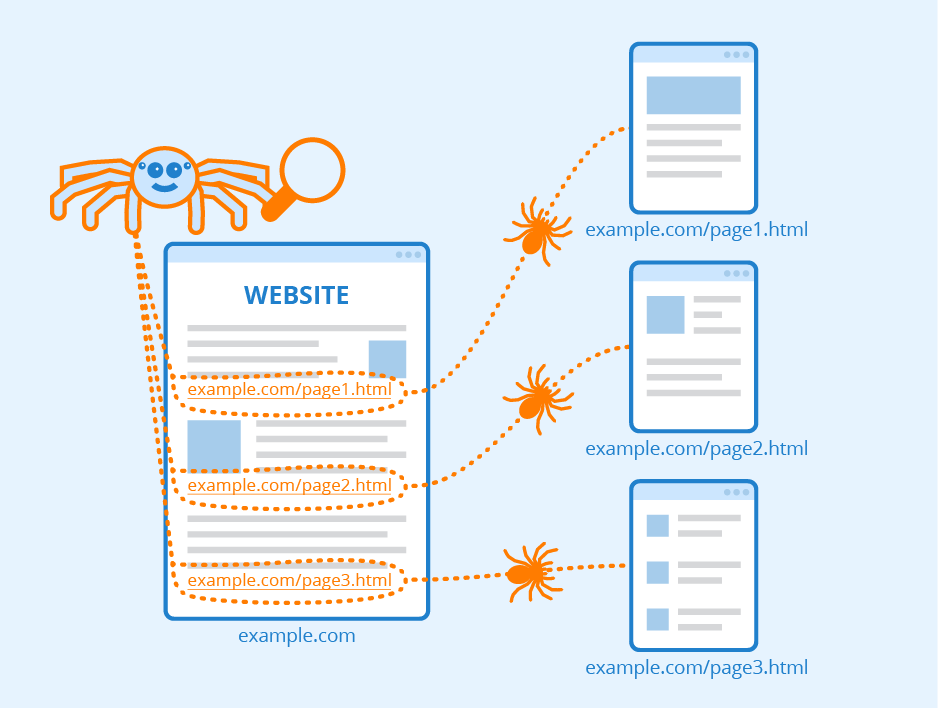

The function of search engine spiders is to look for content, regardless of the format, by following links. It all starts with the bots crawling a set of known pages. The bots then follow the URLs in the links on those pages, and from there follow further links to yet more pages.
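To make the link-following idea concrete, here is a minimal sketch in Python. It does not fetch anything from the web; the `LINKS` dictionary is a made-up toy site, and `crawl` is a hypothetical helper that visits pages breadth-first. Real crawlers are far more elaborate, so treat this only as an illustration of the principle.

```python
from collections import deque

# Hypothetical toy site: each URL maps to the URLs it links to.
LINKS = {
    "/": ["/about", "/blog"],
    "/about": ["/"],
    "/blog": ["/blog/post-1", "/blog/post-2"],
    "/blog/post-1": ["/blog/post-2"],
    "/blog/post-2": [],
    "/landing": [],  # no page links here, so a crawler cannot reach it
}

def crawl(start, links):
    """Return URLs in the order a breadth-first crawler discovers them."""
    seen = [start]
    queue = deque([start])
    while queue:
        page = queue.popleft()
        for target in links.get(page, []):
            if target not in seen:
                seen.append(target)
                queue.append(target)
    return seen

discovered = crawl("/", LINKS)
print(discovered)
```

Notice that `/landing` never shows up in the result, because no other page links to it.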

As outlined earlier, whenever a new page is uncovered, it is added to the database; in Google’s case, pages are added to the Google index.

Crawling is performed in two different ways – Discovery Crawl and Refresh Crawl.

As the names suggest, Discovery Crawl refers to finding new pages, while Refresh Crawl updates the index with changes made to existing pages.

Now, assume that Google had earlier indexed your main pages.

Bots will carry out a refresh crawl of those pages periodically and, upon finding new links, trigger a discovery crawl to follow those links to the new pages.

John Mueller from Google gave an insight into this: “The refresh crawl automatically goes through the homepage at a particular frequency. Once a new link is uncovered on the homepage, the bots follow the links, and the discovery crawl is triggered to also check out the new pages”.
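The hand-off between the two crawl types can be sketched in a few lines of Python. This is only an illustration under simple assumptions: `known` stands in for the pages already indexed, `homepage_links` for the links found during a refresh crawl, and `links_to_discover` is a hypothetical helper, not anything Google exposes.

```python
def links_to_discover(known_pages, current_links):
    """Return links seen during a refresh crawl that are not yet known."""
    return [url for url in current_links if url not in known_pages]

# Assumed state: pages indexed earlier, and links on the homepage today.
known = {"/", "/about", "/blog"}
homepage_links = ["/about", "/blog", "/pricing"]

to_discover = links_to_discover(known, homepage_links)
print(to_discover)
```

Only the link that was not known before is handed over to the discovery crawl.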

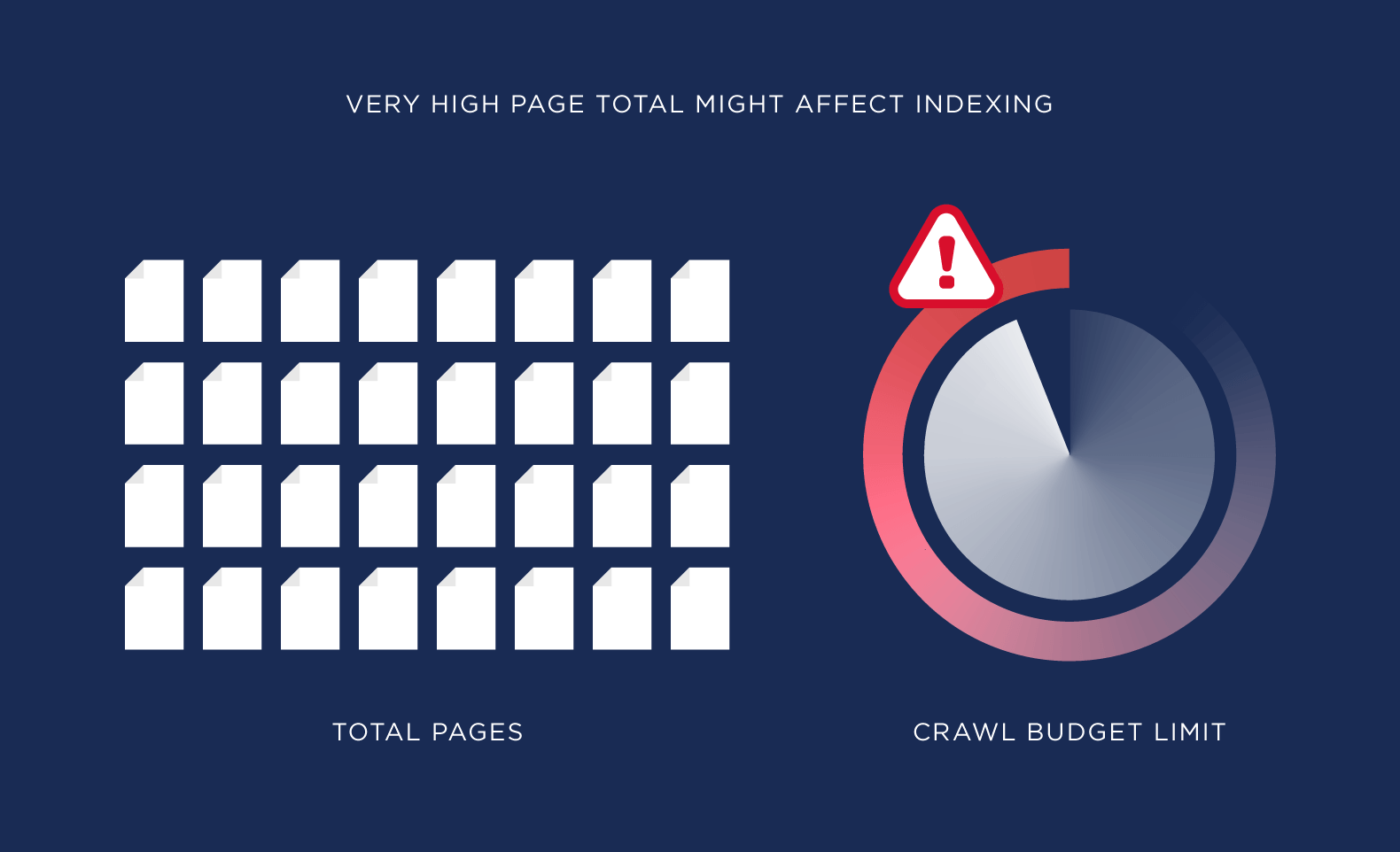

What Is A Crawl Budget?

Okay, so that’s behind us. As you can see, you will definitely want Google or any other search engine to uncover all or most of your pages. This is where the crawl budget comes into play.

So, what is crawl budget?

This refers to the number of pages on your site that bots will crawl within a particular time frame.

So, essentially, once your crawl budget is spent, the bots will stop crawling your site for the rest of that time frame.

This crawl budget is something that is calculated by search engines, and this differs for different websites. So, how do search engines come up with the crawl budget?

Two factors drive the calculations at Google – crawl rate limit and crawl demand.

Crawl Rate Limit – This essentially means the maximum rate at which Google can retrieve a site’s content without any impact on the performance of the site. The best way to get a higher crawl rate is to rely on a fast, responsive server.

Crawl Demand – This refers to how many URLs the bot wants to crawl. Demand hinges on the need to index new pages or reindex changed ones, as well as on the site’s popularity.
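Google does not publish a formula for crawl budget, so the sketch below is only a toy model. It assumes, purely for illustration, that the budget for one time frame is the smaller of the two factors above. The function `crawl_plan` and its parameters are hypothetical.

```python
def crawl_plan(pending_urls, rate_limit, demand):
    """Split pending URLs into crawled and skipped under a toy budget."""
    # Assumption: the budget is the smaller of what the server can
    # bear (rate_limit) and what the crawler wants to fetch (demand).
    budget = min(rate_limit, demand)
    crawled = pending_urls[:budget]
    skipped = pending_urls[budget:]
    return crawled, skipped

urls = ["/", "/blog", "/about", "/blog/post-1", "/blog/post-2"]
crawled, skipped = crawl_plan(urls, rate_limit=3, demand=10)
print(crawled)
print(skipped)
```

In this example the slow server is the bottleneck: even though the crawler wants more, only three pages are fetched and the rest wait for the next time frame.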

How Does Crawl Efficacy Matter?

Contrary to popular perception, crawl budget is not the only measure that matters. There is another, with greater relevance.

For instance, when you look only at the crawl budget, all that you are concerned about is the number of your web pages crawled.

The question is, why would you even care about repeat crawls of pages that have not been modified since the previous crawl?

No benefits, right?

This is exactly why you need to look at crawl efficacy.

Ok, So What Exactly Is Crawl Efficacy?

As the name suggests, this refers to how quickly search engine bots get to the pages on a website. Put another way, crawl efficacy is the time between the creation of a new page, or an update to an existing page, and the bot’s next crawl of that page.

So, effectively, crawl efficacy is better when a fresh visit or a repeat visit by the bot occurs sooner.
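If you keep a record of when a page was published and when a bot next visited it, the gap is simple to compute. The sketch below assumes you already have both timestamps (for example from your CMS and your server logs); the dates and the helper `crawl_delay_hours` are made up for illustration.

```python
from datetime import datetime

def crawl_delay_hours(published_at, crawled_at):
    """Hours between a page being published (or updated) and crawled."""
    return (crawled_at - published_at).total_seconds() / 3600

# Hypothetical timestamps for one page.
published = datetime(2024, 1, 10, 9, 0)
crawled = datetime(2024, 1, 11, 15, 0)

delay = crawl_delay_hours(published, crawled)
print(delay)  # 30.0
```

The smaller this number is across your important pages, the better your crawl efficacy.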

Now, do you know how to find out when and how often Google’s spiders go through your pages? Well, you can check the reports in Google Search Console.

How Does One Get A Website Crawled Properly?

If you are in doubt as to whether your website is crawled properly by Google, here’s how you can make sure it is.

Sitemap

This is essentially a listing of the pages on your website that you want Google to know about.

So, the ideal step is to create a sitemap and submit it to Google through the Search Console. This way, you point Google’s bots to the pages that you consider important and want noticed. In effect, it establishes a short path to the most important pages, which the bots can easily follow.
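A sitemap is usually an XML file that follows the sitemaps.org protocol. As a rough sketch, the Python below builds a very small one using only the standard library. The `example.com` URLs and the `build_sitemap` helper are placeholders, and real sitemaps often carry extra fields such as last-modified dates.

```python
import xml.etree.ElementTree as ET

# Namespace defined by the sitemaps.org protocol.
SITEMAP_NS = "http://www.sitemaps.org/schemas/sitemap/0.9"

def build_sitemap(urls):
    """Return a minimal sitemap XML string listing the given URLs."""
    urlset = ET.Element("urlset", xmlns=SITEMAP_NS)
    for url in urls:
        entry = ET.SubElement(urlset, "url")
        ET.SubElement(entry, "loc").text = url
    return ET.tostring(urlset, encoding="unicode")

# Placeholder URLs for the pages you consider important.
sitemap_xml = build_sitemap([
    "https://www.example.com/",
    "https://www.example.com/blog/",
])
print(sitemap_xml)
```

You would save the output as a file such as sitemap.xml on your site and then submit its address in the Search Console.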

Robots.txt

Now, there are portions of your site that you may not want Google to crawl, so that the bots can focus only on the important pages. To do this, you can use a robots.txt file to list the paths that bots should stay away from.
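Here is a small sketch of how such rules behave, using Python’s built-in `urllib.robotparser` module. The robots.txt content and the `example.com` URLs are invented for this example; the point is simply to show which URLs a well-behaved bot would skip.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical robots.txt: keep all bots out of the cart and search pages.
ROBOTS_TXT = """\
User-agent: *
Disallow: /cart/
Disallow: /search
"""

parser = RobotFileParser()
parser.parse(ROBOTS_TXT.splitlines())

can_fetch_blog = parser.can_fetch("Googlebot", "https://www.example.com/blog/post-1")
can_fetch_cart = parser.can_fetch("Googlebot", "https://www.example.com/cart/checkout")
print(can_fetch_blog, can_fetch_cart)
```

The blog post is open to crawling, while anything under /cart/ is off limits. Testing your rules this way before you publish them can help you avoid blocking an important page by mistake.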

Internal Links

There are other important factors that contribute to crawl efficiency.

Search engines also follow links on a site to reach other new pages within the site.

Therefore, when you put up a new page or draft fresh content, ensure that you place a link to it on a relevant page of the site. By doing this, the bots will uncover the new page on their next visit.

Backlinks

These are links from a third-party website to your site. In other words, they come from external sites that host content related to your domain or niche. So, when Googlebot crawls the content on those sites, the links on their pages will lead the search engine to your site or pages. These links are called backlinks, and are different from internal links.

Paywalled Content And Impact On Search Engines

Many websites have gated content, wherein the user is expected to pay or subscribe for specific content. Bots generally cannot reach content or pages that have restricted access.

Therefore, if you have a few pages that are very important, it would be a good idea to keep those pages out of your gated or paywalled content.

Indexing

What is a Search Engine Index?

This refers to the database of a search engine, where the information compiled by bots is stored. This database is called the search engine index, and it is essentially a list of the web pages uncovered by the bots.

Is Search Engine Indexing Important?

To answer the question, it is important to know what happens when a user enters a query in the search bar. Every time a query is entered, the search engine checks the index to retrieve information that is relevant to the query.

Therefore, if you want your page to be displayed when a query relevant to your page is entered, that page needs to get on the index.
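To see why a page that is not in the index can never be shown, here is a toy version of an index in Python. It is a simple word-to-pages lookup (often called an inverted index) built from made-up pages; real search engine indexes are vastly more sophisticated, so take this only as a sketch of the idea. The names `PAGES`, `build_index` and `search` are all hypothetical.

```python
from collections import defaultdict

# Hypothetical pages that have been crawled, with their text content.
PAGES = {
    "/phones": "how to buy the best smartphone",
    "/laptops": "best laptops for students",
    "/blog/crawl": "how search engines crawl pages",
}

def build_index(pages):
    """Map every word to the set of pages that contain it."""
    index = defaultdict(set)
    for url, text in pages.items():
        for word in text.lower().split():
            index[word].add(url)
    return index

def search(index, query):
    """Return pages that contain every word in the query."""
    words = query.lower().split()
    if not words:
        return set()
    results = set(index.get(words[0], set()))
    for word in words[1:]:
        results &= index.get(word, set())
    return results

index = build_index(PAGES)
print(search(index, "best smartphone"))
```

The query is answered entirely from the index. A page that was never added to `PAGES` simply has no way of appearing in the results.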

Pages that are crawled by the bots become candidates for the index, although a search engine may choose not to index every page it crawls. For the pages that do get indexed, various factors will impact the positioning of each page in the results for a query (these are the Search Engine Results Pages – SERPs). A separate subsequent section offers detailed information about SERPs.

How Long Does It Take For A Page To Be Indexed?

Let’s assume you have launched your website; you will want your pages to be indexed at the earliest so you get a digital presence quickly.

However, this could sometimes take anywhere from a few days to a few weeks. This could be frustrating, right?

Well, you could do something about it.

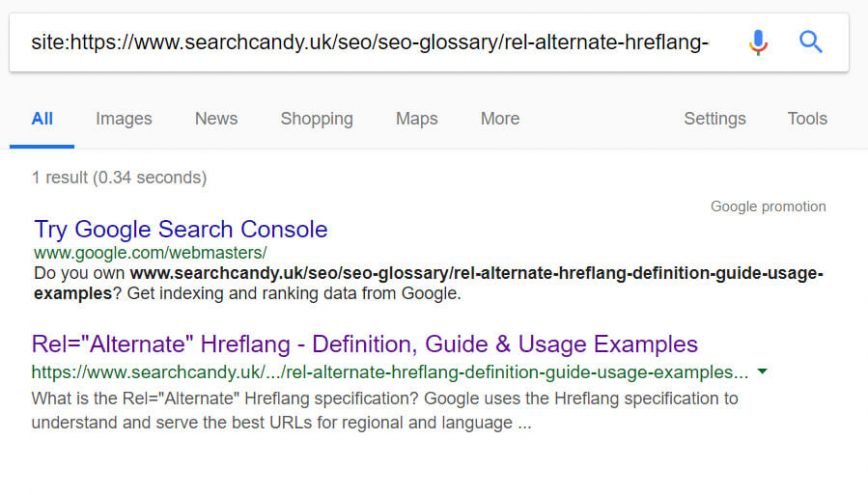

Check If Your Page Is Indexed Or Not

The first step is to check if Google has indexed your page. Go to Google and search in the following format: site:yoursite.com, with no space after the colon.

After you enter this, Google displays the indexed pages of your site.

If no results are displayed, Google has not indexed the site. Here’s another scenario: some pages may be displayed while others are not. This indicates that the missing pages are not indexed.

Another method to check if your page is indexed is to look it up in Google Search Console.

Tips To Getting Your Content Indexed Quickly

If you want your content to be quickly indexed, here’s how you could do it.

Impact Of Low-Quality Pages

Bots crawl all pages, and this includes pages that are of low quality. When the bots crawl through these pages, they eat up your crawl budget, and this has an impact on crawl efficacy.

Therefore, remove low-quality pages.

This will get Google to look up and index the pages that are important, and will improve crawl efficacy.

Orphan Pages

Did you know that search engines leave out orphaned pages?

As outlined above, Google uncovers new pages through links. Let’s say you have a page that no other page links to; the page is essentially orphaned and the bots will not reach it.

So, ensure that your content is linked from relevant pages. Plan your site architecture accordingly.
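If you have a list of all your pages and a record of which page links to which, spotting orphans is straightforward. The Python sketch below works on made-up data; `find_orphans` is a hypothetical helper, and it assumes the homepage `/` is always reachable as the entry point.

```python
def find_orphans(all_pages, links, entry_point="/"):
    """Return pages that no other page links to, excluding the entry point."""
    linked = {
        target
        for source, targets in links.items()
        for target in targets
        if target != source  # a page linking to itself does not count
    }
    return sorted(
        page for page in all_pages
        if page not in linked and page != entry_point
    )

# Hypothetical site inventory and internal link map.
pages = ["/", "/about", "/blog", "/old-landing"]
links = {"/": ["/about", "/blog"], "/about": ["/"], "/blog": []}

orphans = find_orphans(pages, links)
print(orphans)
```

Any page in the result needs at least one internal link pointing to it before the bots can find it by crawling.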

Request Indexing Through The Google Search Console

If your page has not been indexed by Google, you can manually request indexing of the page through the Search Console.

One of the reasons to get indexed by Google is the fact that Google dominates search. But it is prudent to get indexed by other search engines as well, such as Bing and Yandex.

When you get indexed on various search engines, you will be effectively opening up different channels or pipelines to your site.

Ranking

What is Search Engine Ranking?

As mentioned earlier, after your site/pages have been crawled and indexed, they are then ranked by the search engine. This is driven by various factors and algorithms.

You have probably seen news of Google algorithm updates from time to time. The algorithm is one of these factors, and it keeps changing as Google updates it to improve the way search works. As mentioned earlier, there are more than 200 factors that Google considers when fetching and positioning results in a particular order.

It is therefore important to get your site aligned to these parameters for a better ranking. This in turn will help your digital presence.

Boost Search Engine Ranking

On-Page and Off-Page Optimization

Several elements are extremely important here, such as on-page SEO, including meta tags and headings. Additionally, you need to ensure that content is optimized and that off-page SEO elements are in place. This includes backlinks, which have a significant impact on the rankings of your website.

When your website is listed higher in search results, the probability of users clicking through is higher, and this in turn helps improve organic traffic.

Content Quality And Relevance

The quality of the content on your site is of paramount importance. You need to have content that is of high-quality, that engages users. Question is, how do you know or predict the type of content that users will like or content searched by users?

You need to have a plan in place to carry out a detailed, focused and nuanced keyword research that will narrow down the queries related to your business and domain.

Keep working on your keyword list till you have a list that shows search volume and the keywords used by your competitors.

The next step is to create content that revolves around those keywords. You could create well-crafted blogs or you could post social media content with those keywords.

Remember, your content needs to offer a clear solution or ideas to readers who use those keywords. For instance, a user may be looking for some details through a particular keyword. Your content that is based on the keyword needs to offer those details clearly, thereby establishing a clear link between the keyword and the content. This will help create a reputation as an expert in the particular domain or sub-domain.

When you establish yourself as an expert, you will find your rankings ascend on SERPs.

User Intent

What is the purpose of search engines? As we all know, search engines help users get answers to questions. So, the focus of your efforts should be to create content or offer solutions that are user-centric.

Imagine you are in the business of online sales of mobile phones. You may then need to be ranked high when a user enters this keyword – “how to buy the best smartphones”. You will need to draft a high quality blog or content in some format that offers the right tips and takeaways on how to purchase the best smartphone.

Many are known to try and exploit the keyword to drive traffic to e-commerce portals. This will not have any positive impact on the site ranking. A user who searches with this keyword is essentially looking for an answer, not for a commercial listing of smartphones.

They are looking for information about how to choose a smartphone from various options.

It is therefore necessary to understand the intent of the user and ensure that the content is user-centric.

Technical SEO

The technical part of SEO is of equal importance in rankings. For instance, remove all duplicate content, and pay close attention to canonical tags. Other details that need to be verified include schema markup and page loading speed. All broken links are to be fixed and routinely checked, and redirects tested.

Technical SEO is important because it helps the search engine rank you better, and also helps users have a good experience on your site.
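As one example of a technical check, the sketch below reads a page’s HTML and reports the URL named in its canonical tag, if there is one. It uses Python’s built-in `html.parser`; the `CanonicalFinder` class, the `find_canonical` helper and the sample HTML are illustrative assumptions, not part of any SEO tool.

```python
from html.parser import HTMLParser

class CanonicalFinder(HTMLParser):
    """Remember the href of the last <link rel="canonical"> tag seen."""

    def __init__(self):
        super().__init__()
        self.canonical = None

    def handle_starttag(self, tag, attrs):
        attr = dict(attrs)
        if tag == "link" and (attr.get("rel") or "").lower() == "canonical":
            self.canonical = attr.get("href")

def find_canonical(html):
    """Return the canonical URL declared in the HTML, or None."""
    finder = CanonicalFinder()
    finder.feed(html)
    return finder.canonical

# Hypothetical page: a filtered product listing pointing to its main version.
page = (
    '<html><head>'
    '<link rel="canonical" href="https://www.example.com/shoes">'
    '</head><body>Shoes, sorted by price</body></html>'
)
print(find_canonical(page))
```

Running a check like this across near-duplicate pages lets you confirm that they all point to the one version you want search engines to treat as the original.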

Mobile Responsive And Indexing

Google’s decision to give priority to mobile-first indexing makes it necessary for you to optimize your site for tablets and smartphones.

With mobile-first indexing, Google primarily evaluates the mobile version of a site for the purpose of indexing and ranking, so the absence of a mobile-friendly version will negatively impact ranking.

Assume that you have a site that is not mobile optimized. A competitor’s similar site that is mobile optimized is likely to receive a better ranking from Google.